Anomaly Detection using Autoencoders and Generative Adversarial Networks (GANs) for Cybersecurity over Network Traffic

10/31/2021

Data generated by infrastructural, industrial, and consumer devices are progressively being digitised, stored, and processed in many ways and for various applications. Moreover, electronic systems embedded in critical infrastructure (e.g., power stations), industrial processes (e.g., robotic assembly lines), and consumer goods (e.g., autonomous vehicles) are increasingly being linked together through various layers of network connectivity frameworks (e.g., IoT, Industry 5.0, etc.). As this new reality of big data accumulation and digital transformation takes hold, recent years have seen an exponential increase in attacks against governments, organisations, and individuals who hold and process this type of data, manage critical facilities, or simply use electronic goods. Collectively referred to as “cyberattacks” and typically categorised as Denial of Service (DoS), ransomware, phishing, and malware, amongst many others, these malicious incursions have grown in sophistication and impact, and they manifest in different ways depending on the physical and digital infrastructural networks involved. Here we focus on the Industrial Internet of Things and Smart Grid (SG) technologies, which provide substantial advantages such as self-healing, pervasive control, and improved utilisation of resources. However, the evolution of these smart technologies introduces severe cybersecurity issues due to (a) the new attack surface they create, (b) the inherent vulnerabilities of the TCP/IP protocol suite, and (c) the presence of legacy systems, such as Industrial Control Systems (ICS) and Supervisory Control and Data Acquisition (SCADA).

Cyberattacks can cause system failures, expose sensitive information, or prevent people from accessing critical infrastructure. In the past, such attacks were identified and handled by experts and trained personnel using specialised tools and manual processes to detect suspicious activity in network traffic. More recently, solutions based on machine learning (ML) and deep learning (DL) have been introduced, with the potential to make these cyber watchdogs more effective and efficient. If a DL model could autonomously and accurately identify anomalous packets travelling through a network, human security professionals would spend less time sifting through network traffic alerts and log files.

The detection of anomalies, or outliers, in network traffic is one of the classic approaches used to identify cyberattacks in real time. Current ML techniques for outlier detection in cybersecurity are mainly unsupervised. One main reason is the nature of the available data. In other ML problems, such as object detection and recognition, data are available and well balanced across all the supported classes. In anomaly detection, by contrast, normal samples far outnumber anomalous ones (i.e., those containing cyberattacks). Another reason for favouring unsupervised solutions is that they can detect attacks that have not been discovered or recorded in the past.

Many unsupervised methods are based on clustering, that is, the division of data into groups of similar objects. Each cluster is expected to contain objects that are similar to one another and dissimilar to the objects in other clusters. Various clustering and related unsupervised methods can be applied to anomaly detection. Some of the proposed approaches are described below:

- k-Means clustering is a cluster analysis method that partitions the data into k disjoint clusters on the basis of the feature values of the objects to be grouped. Here, k is a user-defined parameter, and the resulting cluster centroids are then used for fast anomaly detection on newly acquired data. During the training stage, given n-dimensional data samples, the method determines a clustering of the segment points and the corresponding centres, or centroids, of the clusters. These centroids provide a library of "normal" data sample shapes. During the testing stage, the method tries to reconstruct each new sample using the centroids learned during training. A high reconstruction error on an individual segment indicates an anomaly.

- Stochastic Outlier Selection (SOS) is an unsupervised, affinity-based outlier-selection algorithm that takes as input either a feature matrix or a dissimilarity matrix and outputs an outlier probability for each data point. Intuitively, a data point is considered an outlier when the other data points have insufficient affinity with it.

- One of the classic approaches used for anomaly detection is the One Class Support Vector Machine (OC-SVM), which estimates the hyperplane that separates the normal data from the origin of the feature space with the greatest margin; samples that fall on the origin side of the hyperplane are treated as anomalous. This approach can offer high accuracy and efficiency on noisy cybersecurity network traffic data, but its complexity and processing time increase for high-dimensional data.

- The Isolation Forest (IF) algorithm deliberately “overfits” models in order to detect anomalies. Because outliers have more empty space around them, they take fewer steps to isolate. The IF uses decision trees and regards the points with shorter path lengths as outliers. First, the path length between the root and each data point (leaf) is measured; these lengths are then compared with the average path length. The path is expected to be relatively short for outliers.

- One Class Deep Neural Networks (DNNs): Anomaly detection (AD) methods based on One Class DNNs are categorised as either “mixed” or “fully deep”. In mixed approaches, representations are learned separately in a preceding step and then fed into classical AD methods such as the Isolation Forest or OC-SVM. Fully deep approaches, in contrast, employ the representation learning objective directly for detecting anomalies. In general, existing deep AD approaches rely mainly on the reconstruction error, with deep autoencoders and Generative Adversarial Networks (GANs) being the main architectures used.
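
The k-Means item in the list above can be illustrated with a minimal sketch. It assumes NumPy, synthetic two-dimensional "traffic features", a plain Lloyd's-algorithm implementation, and a 99th-percentile threshold; none of these choices come from the original work, and the function names `fit_kmeans` and `anomaly_score` are hypothetical.

```python
import numpy as np

def fit_kmeans(X, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm; returns the k cluster centroids."""
    rng = np.random.default_rng(seed)
    # Initialise the centroids with k distinct training samples.
    centroids = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every sample to its nearest centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids

def anomaly_score(X, centroids):
    """Distance to the closest centroid, used here as the reconstruction error."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.min(axis=1)

# Synthetic "normal" data: two tight groups in 2-D (illustrative only).
rng = np.random.default_rng(42)
normal = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.3, size=(200, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.3, size=(200, 2)),
])

centroids = fit_kmeans(normal, k=2)
# Threshold: 99th percentile of the training scores (an assumption).
threshold = np.percentile(anomaly_score(normal, centroids), 99)

new_samples = np.array([[0.1, -0.2],   # close to a learned centroid
                        [2.5, 2.5]])   # far from both centroids
flags = anomaly_score(new_samples, centroids) > threshold
```

Here `flags` marks only the second sample as anomalous. In practice the threshold percentile and the value of k would have to be tuned on real traffic data.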

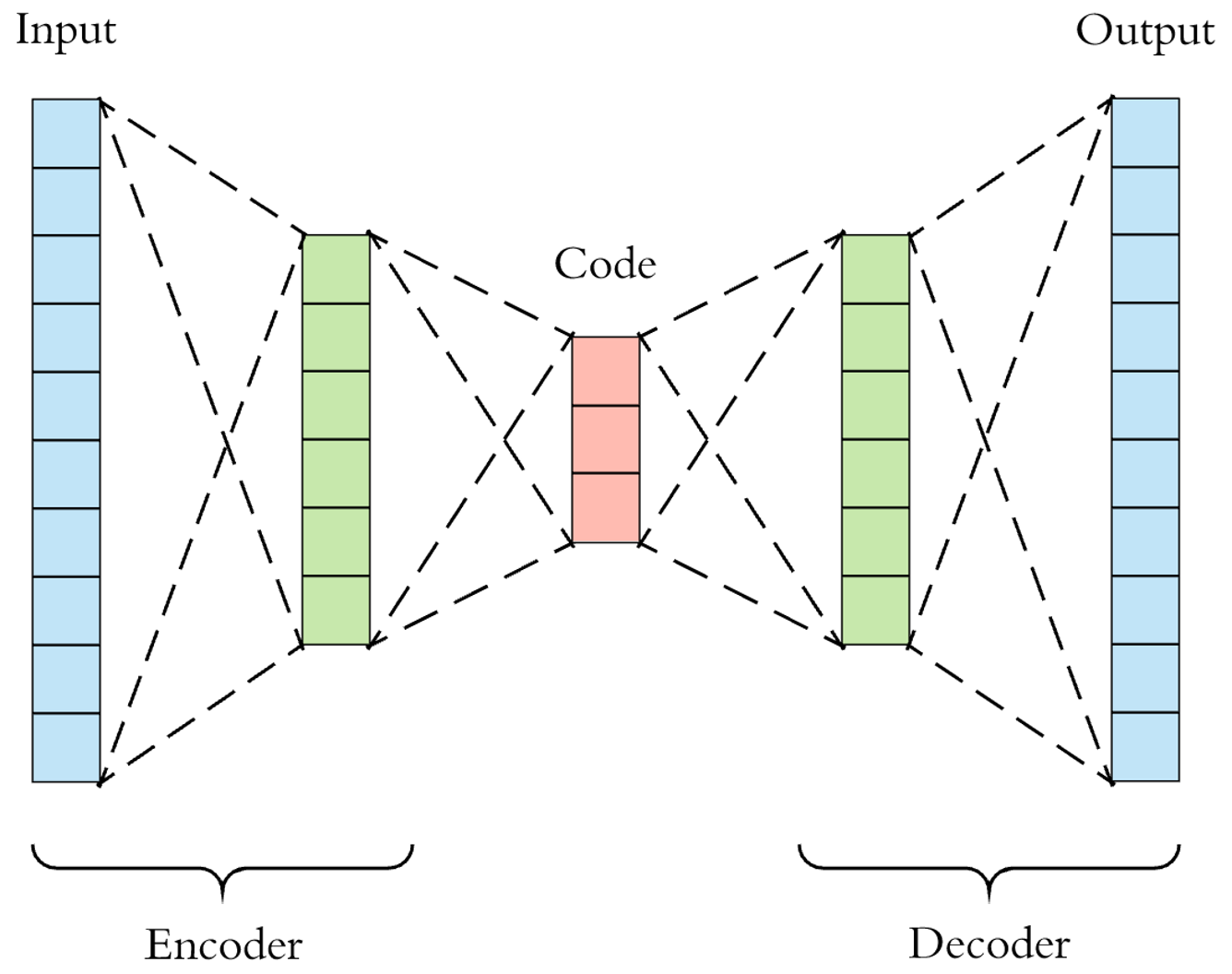

Autoencoders are a type of deep neural network that aims to approximate the identity function while passing the input through an intermediate representation of lower dimensionality. Training involves minimising the reconstruction error. Consequently, autoencoders extract the main factors of variation from normal samples and reconstruct such samples accurately, whereas for samples that are not normal, in which these common factors are absent, the reconstruction is poor. As a result, autoencoders can be used in mixed approaches, by feeding the latent representation into classical AD methods, and also in fully deep architectures, by using the reconstruction error as the anomaly score. The objective of an autoencoder is not anomaly detection itself but dimensionality reduction. Therefore, one of the main challenges is to identify the right level of compression, that is, the number of latent dimensions. Selecting the right level of compactness is tricky because of the unsupervised nature of the task and the unknown intrinsic dimensionality of the data.
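
The use of the reconstruction error as an anomaly score can be sketched as follows. To keep the example short and deterministic, it swaps the deep autoencoder for a linear one, whose optimal encoder and decoder span the same subspace as PCA and can therefore be obtained in closed form with an SVD. The synthetic data, the `latent_dim` value, and the 99th-percentile threshold are illustrative assumptions, not part of the original work.

```python
import numpy as np

def fit_linear_autoencoder(X, latent_dim):
    """Closed-form linear autoencoder: the top principal directions of X."""
    mean = X.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:latent_dim]

def encode(X, mean, components):
    return (X - mean) @ components.T      # project into the latent space

def decode(Z, mean, components):
    return Z @ components + mean          # map back to the input space

def reconstruction_error(X, mean, components):
    X_hat = decode(encode(X, mean, components), mean, components)
    return np.linalg.norm(X - X_hat, axis=1)

# Synthetic "normal" samples: 3 features driven by 1 hidden factor plus noise.
rng = np.random.default_rng(0)
factor = rng.normal(size=(500, 1))
normal = factor @ np.array([[1.0, 2.0, -1.0]]) + 0.05 * rng.normal(size=(500, 3))

mean, components = fit_linear_autoencoder(normal, latent_dim=1)
# Threshold: 99th percentile of the training errors (an assumption).
threshold = np.percentile(reconstruction_error(normal, mean, components), 99)

samples = np.array([[1.0, 2.0, -1.0],    # follows the learned factor
                    [1.0, -2.0, 1.0]])   # breaks the correlation structure
errors = reconstruction_error(samples, mean, components)
is_anomaly = errors > threshold
```

The first sample is reconstructed almost exactly and stays below the threshold, while the second is flagged. Changing `latent_dim` shows the compression trade-off discussed above: with too many latent dimensions, anomalous samples are also reconstructed well.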

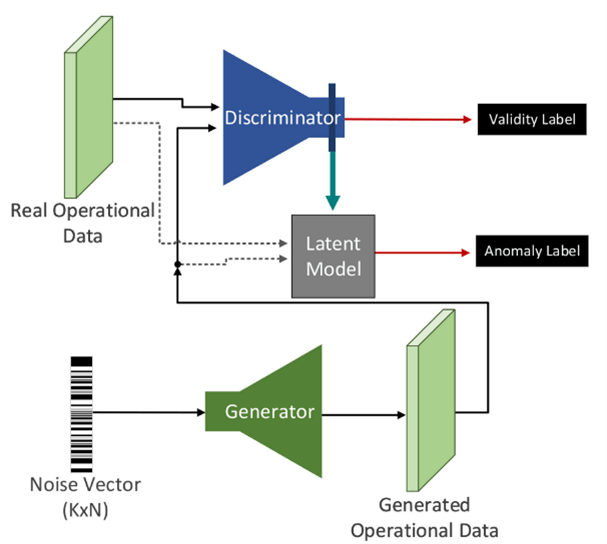

Apart from autoencoders, a novel deep AD method based on Generative Adversarial Networks (GANs), called MENSA (anoMaly dEtection aNd claSsificAtion), has been proposed in SPEAR. The method follows the principles of GANs: it trains a GAN to generate sample data that follow the provided normal training dataset. During the testing stage, it searches the generator’s latent space for the point whose generated sample is closest to the provided test input. Therefore, if the GAN has learned the distribution of the normal training samples, a normal test sample should have an accurate representation in the latent space, whereas the reconstruction will be poor for anomalous samples.
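
The general idea of searching a generator’s latent space for the closest reconstruction can be sketched as below. This is not the MENSA implementation: the "generator" is a hypothetical fixed linear map standing in for a trained GAN generator, and the search is plain gradient descent on the squared reconstruction error. All names and values are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical stand-in for a trained generator: a fixed linear map from a
# 2-D latent space to 3-D samples. A real GAN generator would be a trained DNN.
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])

def generator(z):
    return W @ z

def closest_latent(x, n_steps=300):
    """Gradient descent on z to minimise ||generator(z) - x||^2."""
    z = np.zeros(W.shape[1])
    # Step size 1/L, where L = 2 * sigma_max(W)^2 bounds the gradient's
    # Lipschitz constant, so the iteration is guaranteed to converge.
    lr = 1.0 / (2.0 * np.linalg.norm(W, ord=2) ** 2)
    for _ in range(n_steps):
        residual = generator(z) - x
        z -= lr * 2.0 * W.T @ residual   # gradient of the squared error
    return z

def anomaly_score(x):
    """Distance between x and its best reconstruction from the latent space."""
    z = closest_latent(x)
    return np.linalg.norm(generator(z) - x)

normal_like = generator(np.array([0.5, -1.0]))   # lies on the generator's manifold
off_manifold = np.array([1.0, 1.0, -2.0])        # cannot be produced by any z

score_normal = anomaly_score(normal_like)
score_anomalous = anomaly_score(off_manifold)
```

A sample that the generator can produce is reconstructed with a score close to zero, whereas a sample outside the generator’s range keeps a clearly positive score. With a real, non-linear generator the search would typically rely on automatic differentiation rather than the analytic gradient used here.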

The proposed model introduces new concepts by combining two Deep Neural Networks (DNNs) in a single architecture: (a) an autoencoder and (b) a Generative Adversarial Network (GAN). This is achieved by encapsulating the autoencoder within the GAN: the Generator becomes the Decoder, while the Discriminator is structured as the Encoder. The Discriminator-Encoder is utilised for the anomaly detection procedure and comprises the layers from the input to the latent layer; in particular, it performs dimensionality reduction to the latent space. At this point, the Generator-Decoder has learned to generate close-to-real data that imitate the normal samples. To calculate the anomaly score for a real sample, the Adversarial Loss function is utilised, where the Adversarial Loss is the difference between the generated and the real sample. We validated the efficiency of MENSA with a variety of datasets, including Modbus/TCP and DNP3 network flows and operational records (i.e., time series of electricity measurements).

The next-generation electrical grid, commonly known as the Smart Grid, offers advantages (two-way power flow, self-monitoring, etc.) but also poses challenges (e.g., cybersecurity concerns) for society. In this project, we implemented anomaly detection models capable of detecting more than 15 Modbus/TCP and DNP3 cyberattacks combined, as well as potential anomalies in operational data. The proposed solution, MENSA, combines two DNNs, an autoencoder and a GAN, with a novel minimisation function that considers both the adversarial error and the reconstruction difference. The efficiency of MENSA was validated in several Smart Grid environments and compared with other state-of-the-art solutions and DL architectures. Our future plans include the design of more advanced DL models able to support other ICS/SCADA protocols (EtherCAT, PROFINET, etc.) and to detect cyberattacks against them. Optimisation mechanisms will also be investigated, with the aim of improving performance, inference time, and model size.